LLMs in Healthcare

Introduction

Large Language Models (LLMs) are AI systems trained on massive collections of text to understand and generate human-like language. In healthcare, they are increasingly used as "copilots" to help clinicians and teams work faster with clinical text, guidelines, and documentation.

LLMs are especially useful where information is complex, unstructured, and time-consuming to read or write: progress notes, discharge summaries, radiology reports, referral letters, prior authorizations, and patient education materials.

Important: LLMs can produce confident but incorrect statements ("hallucinations"). Safe healthcare deployment requires strong governance: human review, audit logs, secure data handling, and clinical validation.
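
Two of these governance controls, audit logging and mandatory human review, can be made concrete in code. The following is a minimal sketch for a single-process Python service. `AuditLog`, `Draft`, `record_draft`, `approve`, and `release` are hypothetical names, not any vendor's API, and a real audit store would also need to be durable and tamper-evident.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class AuditLog:
    """Append-only list of JSON lines; a stand-in for a real, tamper-evident audit store."""
    records: List[str] = field(default_factory=list)

    def append(self, event: str, **details: str) -> None:
        entry = {"time": datetime.now(timezone.utc).isoformat(), "event": event, **details}
        self.records.append(json.dumps(entry, sort_keys=True))


@dataclass
class Draft:
    text: str
    approved_by: Optional[str] = None  # set only when a clinician signs off


def record_draft(log: AuditLog, draft: Draft, model_name: str) -> str:
    # Log a hash of the text rather than the text itself, so the log holds no patient data.
    digest = hashlib.sha256(draft.text.encode("utf-8")).hexdigest()
    log.append("draft_created", model=model_name, sha256=digest)
    return digest


def approve(log: AuditLog, draft: Draft, reviewer: str) -> None:
    draft.approved_by = reviewer
    log.append("draft_approved", reviewer=reviewer)


def release(log: AuditLog, draft: Draft) -> str:
    # Hard gate: nothing leaves the system without a named human reviewer.
    if draft.approved_by is None:
        log.append("release_blocked", reason="no human review")
        raise PermissionError("draft requires clinician review before release")
    log.append("draft_released", reviewer=draft.approved_by)
    return draft.text
```

In this design `record_draft` runs when the model returns text and `approve` runs when a clinician signs off. `release` succeeds only after approval. Calling `release` on an unapproved draft raises an error and leaves a `release_blocked` entry in the log.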

How LLMs are being used in healthcare

Clinical documentation

• Drafting SOAP notes, discharge summaries, referral letters, and operative notes from clinician prompts.

• Formatting notes into structured templates (problem list, medications, allergies).

• Reducing administrative burden when integrated with EHR workflows.
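
Template formatting is more reliable when the model returns machine-readable output that is validated before it reaches the chart. The sketch below assumes the model has been prompted to reply with a JSON object whose keys are `problem_list`, `medications`, and `allergies`. These are hypothetical names chosen for this example, not an EHR standard.

```python
import json
from dataclasses import dataclass
from typing import List

REQUIRED_SECTIONS = ("problem_list", "medications", "allergies")


@dataclass
class StructuredNote:
    problem_list: List[str]
    medications: List[str]
    allergies: List[str]


def parse_structured_note(llm_output: str) -> StructuredNote:
    """Parse a model's JSON reply into a fixed template, rejecting malformed output."""
    data = json.loads(llm_output)  # raises json.JSONDecodeError (a ValueError) on non-JSON text
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [s for s in REQUIRED_SECTIONS if s not in data]
    if missing:
        raise ValueError(f"missing sections: {missing}")
    for section in REQUIRED_SECTIONS:
        value = data[section]
        if not isinstance(value, list) or not all(isinstance(v, str) for v in value):
            raise ValueError(f"section {section!r} must be a list of strings")
    # Extra keys in the reply are ignored; only the template sections are kept.
    return StructuredNote(**{s: data[s] for s in REQUIRED_SECTIONS})
```

Malformed replies raise `ValueError`. They can then be retried or sent for manual entry, so the template is never silently filled with bad data.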

EHR summarization and chart review

• Summarizing long patient histories into timelines (diagnoses, labs, imaging, procedures).

• Flagging items that need follow-up (e.g., pending labs, overdue screenings).
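
The timeline and follow-up ideas can be sketched once chart events have been extracted into simple records. `ChartEvent`, its `status` field, and the seven-day pending threshold are hypothetical choices for illustration. Overdue-screening checks would also need a screening schedule and are not shown.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class ChartEvent:
    when: date
    kind: str             # e.g. "diagnosis", "lab", "imaging", "procedure"
    description: str
    status: str = "final"  # hypothetical field: "final" or "pending"


def build_timeline(events: List[ChartEvent]) -> List[str]:
    """Return one line per event, oldest first."""
    ordered = sorted(events, key=lambda e: e.when)
    return [f"{e.when.isoformat()}  {e.kind:<10} {e.description}" for e in ordered]


def flag_follow_ups(events: List[ChartEvent], today: date,
                    max_pending_days: int = 7) -> List[str]:
    """Flag results still pending after a (hypothetical) number of days."""
    flags = []
    for e in events:
        age = (today - e.when).days
        if e.status == "pending" and age > max_pending_days:
            flags.append(f"{e.description}: pending for {age} days")
    return flags
```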

Clinical decision support (assistive)

• Retrieving guideline snippets and presenting differential diagnoses or next-step considerations.

• Generating checklists for care pathways (sepsis bundle, stroke workup) with citations to local protocols.
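
One common way to keep decision-support output tied to local protocols is to retrieve relevant snippets first and then instruct the model to answer only from them, citing their IDs. The sketch below uses plain word overlap as a simple stand-in for the keyword or embedding search that a real system would likely use. The function names and prompt wording are hypothetical.

```python
import re
from typing import Dict, List, Set, Tuple


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, protocols: Dict[str, str], k: int = 2) -> List[Tuple[str, str]]:
    """Rank protocol snippets by word overlap with the query; return (citation_id, text)."""
    q = tokenize(query)
    scored = []
    for doc_id, text in protocols.items():
        overlap = len(q & tokenize(text))
        if overlap:  # drop snippets that share no words with the query
            scored.append((overlap, doc_id, text))
    scored.sort(key=lambda t: (-t[0], t[1]))  # highest overlap first, ties broken by id
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


def build_prompt(query: str, snippets: List[Tuple[str, str]]) -> str:
    """Ask the model to answer only from the cited snippets."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in snippets)
    return (
        "Answer using only the protocol excerpts below and cite their IDs in brackets. "
        "If they do not cover the question, say so.\n\n"
        f"{context}\n\nQuestion: {query}"
    )
```

Because each snippet carries an ID, a reviewer can check every cited statement against the local protocol it came from.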

Patient communication and education

• Creating patient-friendly explanations (discharge instructions, medication education) in local languages.

• Conversational triage assistants (with careful safety guardrails and escalation).
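
A common guardrail pattern is to screen each message for red-flag phrases before the model is called, and to answer matching messages with fixed escalation text rather than generated text. The phrase list, message, and `triage_reply` function below are illustrative placeholders, not a clinically validated triage protocol. `generate` stands for whatever model call the system uses.

```python
from typing import Callable, List, NamedTuple, Sequence

# Placeholder phrases for illustration only, NOT a clinically validated red-flag list.
RED_FLAG_PHRASES = ("chest pain", "trouble breathing", "suicidal", "unconscious")

ESCALATION_MESSAGE = (
    "Your message may describe an emergency. Please contact emergency services now."
)


class TriageResult(NamedTuple):
    reply: str
    escalated: bool
    matched: List[str]


def triage_reply(message: str, generate: Callable[[str], str],
                 red_flags: Sequence[str] = RED_FLAG_PHRASES) -> TriageResult:
    """Screen the message for red flags before the model is ever called."""
    lowered = message.lower()
    matched = [phrase for phrase in red_flags if phrase in lowered]
    if matched:
        # Fixed escalation text: the model is not called, so it cannot improvise here.
        # A real system would also alert staff at this point (not shown).
        return TriageResult(ESCALATION_MESSAGE, True, matched)
    return TriageResult(generate(message), False, [])
```

Plain substring matching misses paraphrases and also fires on negations such as "no chest pain". The second failure errs toward escalation. The first is why a list like this supplements, rather than replaces, clinical review of the triage design.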

Medical coding and billing support

• Suggesting ICD/CPT codes from clinical notes; highlighting missing documentation for coding completeness.

• Automating prior authorization drafts and payer-specific forms.
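
A model can suggest a plausible-looking code that does not exist or does not apply, so its suggestions should be checked against an authoritative code table before a coder sees them. The sketch below assumes the organization loads its ICD-10-CM table into a dictionary (`valid_codes`, hypothetical). The regular expression checks only the basic ICD-10-CM shape: a letter, a digit, a letter or digit, then optionally a period and up to four more characters. It does not encode any coding rules.

```python
import re
from typing import Dict, List, Tuple

# Basic ICD-10-CM shape: letter, digit, letter-or-digit, then optionally "." and 1-4 characters.
ICD10CM_PATTERN = re.compile(r"^[A-Z][0-9][0-9A-Z](\.[0-9A-Z]{1,4})?$")


def check_suggested_codes(suggested: List[str],
                          valid_codes: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Split model-suggested codes into (accepted, rejected).

    A code is accepted only if it is well formed AND present in the code table,
    so a plausible-looking but invented code is rejected.
    """
    accepted, rejected = [], []
    for raw in suggested:
        code = raw.strip().upper()  # normalize case and stray whitespace
        if ICD10CM_PATTERN.match(code) and code in valid_codes:
            accepted.append(code)
        else:
            rejected.append(raw)
    return accepted, rejected
```

Passing this check means only that the code exists in the table. Whether the documentation supports it still needs human coding review.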

Research and pharmacovigilance

• Literature summarization, evidence extraction, and drafting systematic review notes.

• Monitoring safety narratives (adverse event reports) and clustering similar cases.
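
Clustering similar narratives can be sketched with word-overlap (Jaccard) similarity and a greedy single pass. This is a deliberately simple technique swapped in for illustration. Production pharmacovigilance tools may use embeddings or trained models instead, and the 0.5 threshold is an arbitrary example value.

```python
import re
from typing import FrozenSet, List


def word_set(text: str) -> FrozenSet[str]:
    return frozenset(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: FrozenSet[str], b: FrozenSet[str]) -> float:
    """Size of the intersection divided by size of the union (1.0 for two empty sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster_reports(reports: List[str], threshold: float = 0.5) -> List[List[int]]:
    """Greedy clustering: each report joins the first cluster whose seed is similar enough."""
    clusters: List[List[int]] = []
    seeds: List[FrozenSet[str]] = []  # word set of the first report in each cluster
    for i, text in enumerate(reports):
        words = word_set(text)
        for cluster, seed in zip(clusters, seeds):
            if jaccard(words, seed) >= threshold:
                cluster.append(i)
                break
        else:  # no existing cluster was close enough: start a new one
            clusters.append([i])
            seeds.append(words)
    return clusters
```

The result lists report indices per cluster, so reviewers can read the grouped narratives side by side.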

Operations and quality

• Summarizing incident reports, generating root-cause analysis drafts, and identifying process patterns.

• Helping build SOPs, training materials, and policy documents.

10 models (9 LLM families + 1 Medical ASR system)

1) GPT-4 / GPT-4o (OpenAI)

General-purpose frontier LLMs (some variants are multimodal). Often used for documentation, summarization, and patient communication with appropriate safety layers.

Typical strengths

• Strong reasoning and writing quality

• Useful for summarization, structured extraction, and workflow automation

• Enterprise deployments can support compliance controls

Key cautions

• Not inherently medical-specialized; needs prompt/guardrails and clinical validation

• Risk of hallucinations; must include human review

2) Gemini / MedLM (Google)

Gemini is Google’s LLM family; MedLM refers to healthcare-oriented offerings intended for clinical workflows and medical text tasks.

Typical strengths

• Good integration potential with Google Cloud and healthcare data services

• Useful for EHR summarization and clinical documentation assistance

Key cautions

• Access may be enterprise-focused

• Still requires governance and validation

3) Claude (Anthropic)

A general-purpose LLM family known for strong long-context handling and emphasis on safety behavior.

Typical strengths

• Good for long medical documents (policies, charts, research PDFs)

• Often used for compliance writing and policy drafting

Key cautions

• Not a medical model by default

• Outputs still require verification

4) Llama 3 (Meta)

Open-weights LLM family that organizations can fine-tune and deploy on-prem or in private clouds.

Typical strengths

• Flexible for custom healthcare assistants

• Supports domain fine-tuning and local deployment

Key cautions

• Fine-tuning and hosting require engineering and MLOps

• Quality depends on tuning data and evaluation

5) Mistral (Mistral AI)

Efficient LLMs designed for strong performance with smaller footprints; used in multilingual and enterprise use cases.

Typical strengths

• Good efficiency and deployment flexibility

• Often chosen for multilingual assistants

Key cautions

• Not healthcare-specialized by default

• Needs evaluation on clinical tasks

6) Falcon (Technology Innovation Institute)

Open-weights LLM family sometimes chosen for on-prem deployments and customization.

Typical strengths

• Can be deployed locally for data control

• Fine-tunable for specific workflows

Key cautions

• Model quality varies by version and tuning

• Requires strong evaluation to avoid unsafe outputs

7) Med-PaLM 2 (Google Research)

A medically oriented research model focused on medical question answering and clinical reasoning benchmarks.

Typical strengths

• Designed for medical Q&A and reasoning tasks

• Helpful reference point in medical LLM research

Key cautions

• Often limited public access

• Not a complete product by itself; still needs workflow integration

8) BioGPT (Microsoft Research)

Biomedical language model trained on scientific and biomedical literature, useful for research-heavy text tasks.

Typical strengths

• Strong for biomedical literature generation and extraction

• Useful for drug/target/relation extraction in research contexts

Key cautions

• Less suited for conversational patient-facing use

• May need additional tuning for clinical notes

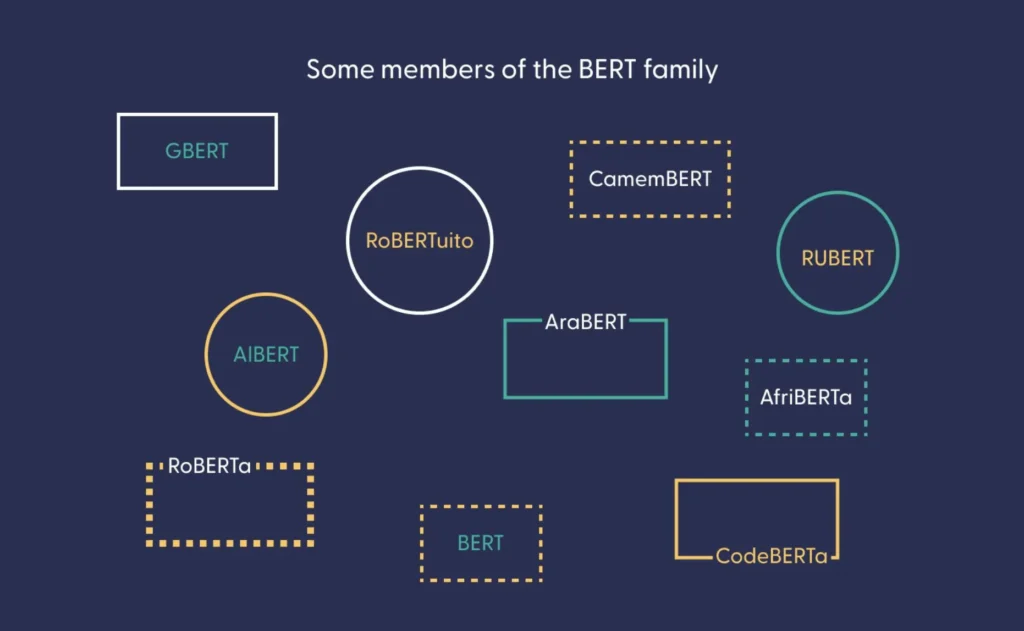

9) ClinicalBERT (clinical NLP family)

BERT-style models trained on clinical notes (e.g., ICU notes). Often used for classification and extraction rather than open-ended generation.

Typical strengths

• Strong for EHR NLP tasks such as entity extraction and producing features for risk prediction

• More predictable behavior for specific classification tasks

Key cautions

• Not an instruction-following chat model by default

• Text generation requires a pipeline that adds other models

10) Medical ASR (Speech-to-Text) + LLM layer ("Med ASR")

Not an LLM by itself, but a critical partner in healthcare workflows: Medical Automatic Speech Recognition converts clinician speech into text, then an LLM can structure it into notes, orders, and summaries.

Typical strengths

• Enables ambient documentation (doctor-patient conversation to notes)

• Improves speed of note creation and reduces manual typing

• Works well when paired with templates and clinical vocabulary

Key cautions

• ASR errors can propagate into notes; needs correction workflow

• Privacy controls are essential because raw audio is sensitive
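
The correction workflow mentioned above can be sketched as an ASR-to-LLM hand-off that keeps recognition uncertainty visible instead of letting the LLM smooth it over. This sketch assumes the ASR engine reports a per-word confidence score. `AsrWord`, the `[[word?]]` marker, and the 0.85 threshold are hypothetical choices, and `structure` stands in for the LLM call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Union


@dataclass
class AsrWord:
    text: str
    confidence: float  # 0.0 to 1.0, as reported by a (hypothetical) ASR engine


def mark_uncertain(words: List[AsrWord], min_confidence: float) -> str:
    """Join words into a transcript, wrapping low-confidence words as [[word?]]."""
    return " ".join(
        w.text if w.confidence >= min_confidence else f"[[{w.text}?]]" for w in words
    )


def transcript_to_note(words: List[AsrWord], structure: Callable[[str], str],
                       min_confidence: float = 0.85) -> Dict[str, Union[str, bool]]:
    """Run the ASR-to-LLM hand-off while keeping uncertain words visible for review."""
    transcript = mark_uncertain(words, min_confidence)
    return {
        "transcript": transcript,
        # `structure` stands in for the LLM step that turns a transcript into a note.
        "draft_note": structure(transcript),
        # Any low-confidence word routes the draft to a clinician correction queue.
        "needs_review": any(w.confidence < min_confidence for w in words),
    }
```

A draft is still produced when words are uncertain, but the markers and the `needs_review` flag make sure a clinician sees them before the note is signed.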

Conclusion

Large Language Models (LLMs) are transforming healthcare by improving clinical documentation, summarization, research, and patient communication. While they enhance efficiency and reduce administrative workload, they are assistive tools, not replacements for clinicians. Safe implementation requires human review, strong governance, data security, and regulatory compliance. When deployed responsibly, LLMs can act as powerful copilots that support better healthcare workflows and outcomes.